11 Most Important Metrics to Track for AI Search Success in 2026

Track what actually matters in 2026: 11 essential AI Search metrics across ChatGPT, Perplexity, Gemini, and Claude to measure visibility, citations, traffic, and real business impact.

Niclas Aunin

Jan 7, 2026

Whatever you call it (GEO, AEO, or ALLMO), AI search optimization demands a new measurement framework beyond traditional SEO. This guide introduces the 11 most important metrics to track across ChatGPT, Perplexity, Gemini, and Claude to capture the full impact of AI search on your business.

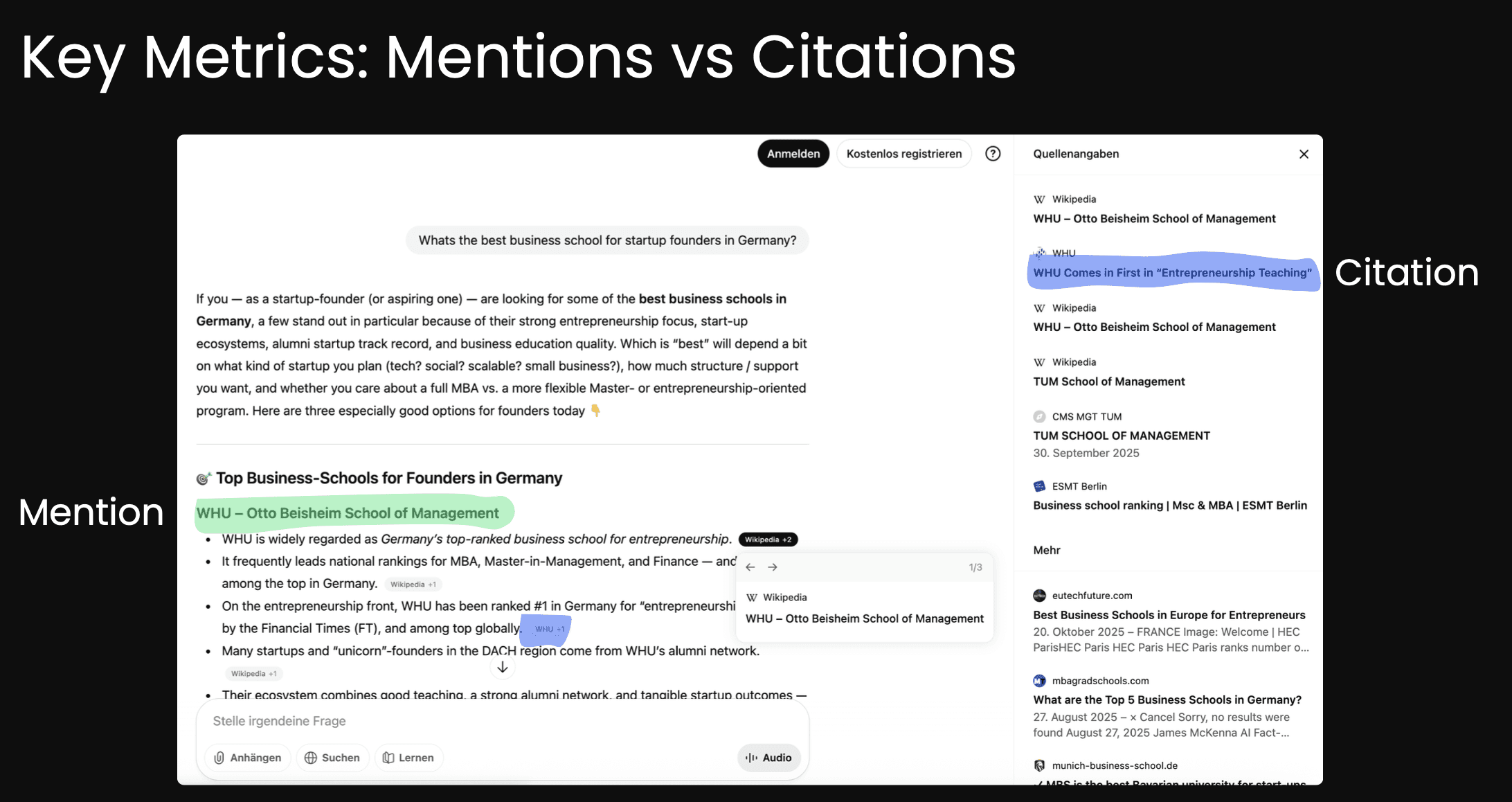

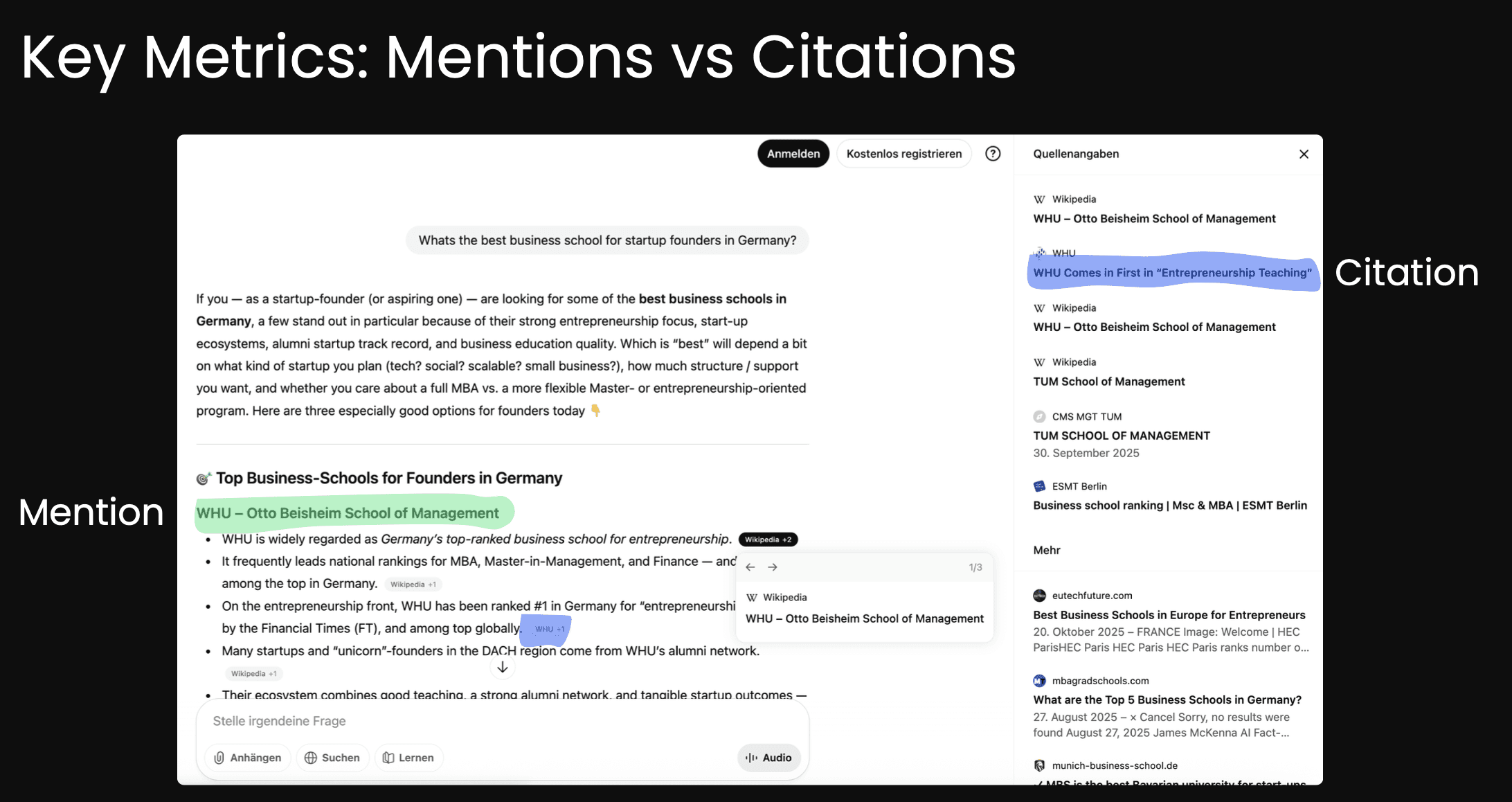

Metric 1: Brand Mentions

LLMO starts with visibility inside AI answers. When ChatGPT, Perplexity, Gemini, or Claude respond to user queries, the first question is whether your brand appears at all, and whether the assistant treats your content as authoritative enough to cite.

Brand mentions measure how often AI assistants explicitly name your brand in their responses. Track mention frequency by engine and across a representative set of prompts that map to your solution areas. This metric functions as share-of-mind in the AI era: the conversational equivalent of appearing in traditional search results. Specialized LLM visibility trackers now log mention rates across engines, letting you monitor narrative control and competitive positioning.
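As a minimal sketch, assuming you have already collected answer texts per engine for your prompt set (the sample answers and the brand name "Acme" are hypothetical), mention frequency is the share of sampled answers that name your brand:

```python
import re
from collections import defaultdict

def mention_rate(answers, brand):
    """Return {engine: share of answers that mention `brand`}.

    `answers` is a list of (engine, answer_text) pairs collected from a prompt set.
    Matching is case-insensitive and on word boundaries, so "Acme" does not match
    "Acmeville". Real trackers also handle aliases, product names, and misspellings.
    """
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    totals = defaultdict(int)
    hits = defaultdict(int)
    for engine, text in answers:
        totals[engine] += 1
        if pattern.search(text):
            hits[engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}

# Hypothetical sample: two answers per engine.
sample = [
    ("chatgpt", "Popular options include Acme and Globex."),
    ("chatgpt", "Globex is a common choice."),
    ("perplexity", "Acme [1] and Initech [2] both offer this."),
    ("perplexity", "ACME's docs explain the setup [3]."),
]
print(mention_rate(sample, "Acme"))  # {'chatgpt': 0.5, 'perplexity': 1.0}
```

Because assistant answers vary between runs, it is worth sampling each prompt several times per engine before comparing rates.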

Metric 2: Citations

Domain citations (source links) capture when your pages are cited as sources with clickable URLs. Perplexity is consistently citation-forward, displaying numbered source lists in nearly every response. ChatGPT, Gemini, and Claude vary: their citation behavior depends on mode, configuration, and user context. Domain citations are precursors to referral traffic and serve as a proxy for perceived authority. Each citation signals that the model's retrieval (RAG) pipeline judged your content trustworthy and relevant for that query.
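A minimal sketch of a citation rate, assuming each sampled response has been reduced to its list of cited URLs (the data below is hypothetical). It counts a citation when a URL's hostname is your domain or one of its subdomains, so look-alike domains are not counted:

```python
from urllib.parse import urlparse

def cites_domain(source_urls, domain):
    """True if any cited URL belongs to `domain` or one of its subdomains."""
    domain = domain.lower()
    for url in source_urls:
        host = (urlparse(url).hostname or "").lower()
        if host == domain or host.endswith("." + domain):
            return True
    return False

def citation_rate(responses, domain):
    """Share of responses whose source list cites `domain` at least once."""
    if not responses:
        return 0.0
    cited = sum(cites_domain(urls, domain) for urls in responses)
    return cited / len(responses)

# Hypothetical source lists from four sampled answers.
responses = [
    ["https://www.example.com/guide", "https://other.org/post"],
    ["https://other.org/post"],
    ["https://docs.example.com/api"],
    ["https://notexample.com/page"],  # look-alike domain, not counted
]
print(citation_rate(responses, "example.com"))  # 0.5
```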

Industry vendors now position citations as "the new backlinks," reflecting their dual role in reputation-building and traffic generation. While AI referral volumes remain small today, citations also trigger downstream effects (branded searches, view-through conversions, and assisted revenue) that extend far beyond immediate clicks.

Metric 3: Share of Voice (presence and prominence inside answers)

Appearing in an AI answer is the first hurdle; where you appear is the next. Visibility and position metrics reveal whether your brand is prominent or buried within responses.

AI visibility or share-of-voice measures your presence across a representative prompt set and across target engines. Calculate how often you appear when users ask high-intent questions in your category. Use tool-reported visibility scores as a baseline and complement with manual spot checks, since different platforms define visibility differently. A rising visibility score indicates your content is increasingly surfaced by models, even if immediate referral traffic lags.
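Because platforms define visibility differently, here is one common formulation as a sketch (an assumption, not any specific tool's formula): share of voice as a brand's answer appearances divided by the appearances of all tracked brands across the same prompt set. Brands and prompts are hypothetical.

```python
from collections import Counter

def share_of_voice(appearances):
    """Compute share of voice per tracked brand.

    `appearances` maps each (engine, prompt) pair to the set of tracked brands
    that appeared in that answer. A brand's share is the number of answers it
    appears in divided by the total brand appearances across all answers.
    """
    counts = Counter()
    for brands in appearances.values():
        counts.update(brands)
    total = sum(counts.values())
    return {brand: n / total for brand, n in counts.items()} if total else {}

appearances = {
    ("chatgpt", "best crm for startups"): {"Acme", "Globex"},
    ("perplexity", "best crm for startups"): {"Globex"},
    ("gemini", "crm pricing comparison"): {"Acme", "Globex", "Initech"},
    ("claude", "crm pricing comparison"): set(),  # no tracked brand appeared
}
sov = share_of_voice(appearances)
print(round(sov["Acme"], 3))  # 0.333
```

Computing the same number per engine (by filtering `appearances` on the engine key) shows where a competitor dominates.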

Metric 4: Position

Average AI position (inclusion order) gauges where you appear in source lists or within the reply body: first cited source versus third or fourth, or mentioned early in the text versus buried at the end. An earlier position typically correlates with more clicks, especially in Perplexity, where users scan numbered sources top to bottom. Position in ChatGPT and Gemini answers also matters when users evaluate which sources to trust. Track average position over time to see whether optimization efforts are moving you up in model outputs.

Together, visibility and position metrics help you prioritize content gaps. If visibility is low on high-demand prompts, invest in structured, authoritative content. If visibility is strong but position is poor, refine formatting, recency, and depth to increase perceived relevance.

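Note, however, that position tends to vary the most of all these metrics, so judge it on averages across repeated samples rather than on single answers.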

Metric 5: Traffic referral from AI (clicks you can count)

AI citations generate measurable traffic, though volumes remain modest compared to traditional search. Tracking both direct referrals and broader AI-influenced sessions provides a clearer picture.

AI referral clicks are direct, attributed visits from assistants: sessions where the referrer is chatgpt.com (or the older chat.openai.com), perplexity.ai, gemini.google.com, or claude.ai. Set up GA4 channel groups using regex patterns to isolate these referrers. Early benchmarks show AI referrals make up roughly 0.5–1% of total site traffic, with ChatGPT typically driving the majority (70–87% of measured AI referrals, according to industry reports). While volumes are small, they are growing month over month and often represent high-intent users.
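The two benchmark ratios above are simple shares, so you can compute them for your own site. The session counts below are made up for illustration, not real data; the sketch counts both chatgpt.com (ChatGPT's current domain) and the older chat.openai.com as ChatGPT.

```python
# Hypothetical monthly sessions by referrer source (illustrative numbers only).
sessions = {
    "google": 42000,
    "(direct)": 15000,
    "chatgpt.com": 300,
    "perplexity.ai": 80,
    "gemini.google.com": 15,
    "claude.ai": 5,
}
AI_SOURCES = {"chatgpt.com", "chat.openai.com", "perplexity.ai",
              "gemini.google.com", "claude.ai"}

total = sum(sessions.values())
ai_total = sum(n for src, n in sessions.items() if src in AI_SOURCES)
chatgpt_total = sessions.get("chatgpt.com", 0) + sessions.get("chat.openai.com", 0)

print(f"AI share of all sessions: {ai_total / total:.2%}")       # 0.70%
print(f"ChatGPT share of AI sessions: {chatgpt_total / ai_total:.0%}")  # 75%
```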

Metric 6: AI-attributed traffic

Overall AI-attributed sessions widen the lens beyond strict referrers to include likely AI-influenced visits that land as Direct or Unassigned. Many AI assistants omit referrer data, and users often copy and paste URLs instead of clicking, so these visits show up as direct traffic. Use channel grouping rules, landing-page patterns (e.g., deep informational pages reached by users with no prior site history), and time-bound association with known AI citation exposures to estimate this broader pool. Consider creating a custom "AI-Influenced" segment in GA4 that flags sessions landing on pages you know are frequently cited by LLMs.

Complement analytics with qualitative signals: add a "How did you hear about us?" field in forms and a discovery question in sales calls to capture self-reported AI influence.

Together, Metrics 5 and 6 provide lower-bound (strict referrals) and upper-bound (attributed sessions) estimates of AI-driven visits. Both are essential, because zero-click answers and missing referrers mean traditional analytics will systematically undercount LLM influence.
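The lower and upper bounds can be sketched over an exported list of sessions. The `Session` fields and the "AI-influenced" rule (no referrer, first visit, landing on a page you know LLMs cite often) are hypothetical simplifications of the heuristics described above, not a GA4 export schema:

```python
from dataclasses import dataclass
from typing import Optional

# Hostnames treated as AI assistant referrers (assumed list; extend as needed).
AI_REFERRERS = ("chatgpt.com", "chat.openai.com", "perplexity.ai",
                "gemini.google.com", "claude.ai")

@dataclass
class Session:
    referrer_host: Optional[str]  # None when no referrer was sent
    landing_page: str
    is_returning: bool

def is_ai_referral(s: Session) -> bool:
    """Strict rule (Metric 5): the referrer is an AI assistant or a subdomain of one."""
    host = s.referrer_host or ""
    return any(host == d or host.endswith("." + d) for d in AI_REFERRERS)

def is_ai_influenced(s: Session, cited_pages: set) -> bool:
    """Heuristic (Metric 6): no referrer, first visit, lands on a frequently cited page."""
    return s.referrer_host is None and not s.is_returning and s.landing_page in cited_pages

def ai_traffic_bounds(sessions, cited_pages):
    """Return (lower_bound, upper_bound) session counts for AI-driven traffic."""
    lower = sum(is_ai_referral(s) for s in sessions)
    extra = sum(is_ai_influenced(s, cited_pages) for s in sessions)
    return lower, lower + extra

cited_pages = {"/guides/ai-metrics", "/docs/pricing-explained"}
sessions = [
    Session("chatgpt.com", "/guides/ai-metrics", False),
    Session("www.perplexity.ai", "/", False),
    Session(None, "/guides/ai-metrics", False),  # counted only in the upper bound
    Session(None, "/guides/ai-metrics", True),   # returning visitor: excluded
    Session(None, "/", False),                   # not a frequently cited page
    Session("google.com", "/docs/pricing-explained", False),
]
print(ai_traffic_bounds(sessions, cited_pages))  # (2, 3)
```

The two rules never overlap (one requires an AI referrer, the other requires no referrer), so no session is counted twice.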

Metric 7: Conversions (prove impact, not just presence)

Visibility and traffic are inputs; conversions and revenue are outcomes. Measuring how AI-referred visitors behave on-site and downstream closes the loop on ALLMO ROI.

On-site conversion rate from AI traffic compares the conversion rate and engagement depth of AI-referred or AI-attributed sessions against other channels. Many early adopters observe higher intent and longer session duration among AI visitors, likely because users arrive after reading a detailed, synthesized answer that pre-qualifies their interest. Validate this pattern with your own data: if AI visitors convert at 2–3× the rate of organic search, that justifies prioritizing AEO/ALLMO even at lower traffic volumes.
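Validating the pattern comes down to a per-channel ratio. A minimal sketch with hypothetical session and conversion counts:

```python
def conversion_rates(stats):
    """stats maps channel -> (sessions, conversions); returns channel -> conversion rate."""
    return {ch: conv / sess for ch, (sess, conv) in stats.items() if sess > 0}

stats = {  # hypothetical numbers, not benchmarks
    "AI referral": (400, 12),
    "Organic search": (42000, 504),
    "Direct": (15000, 150),
}
rates = conversion_rates(stats)
lift = rates["AI referral"] / rates["Organic search"]
print(f"AI: {rates['AI referral']:.1%}, organic: {rates['Organic search']:.1%}, lift: {lift:.1f}x")
# AI: 3.0%, organic: 1.2%, lift: 2.5x
```

With only a dozen AI conversions, a ratio like this is noisy; comparing several months, or adding a confidence interval, gives a sturdier read before you reallocate budget.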

Metric 8: Value

Assisted conversions or influenced revenue captures the downstream impact when an AI answer satisfies the initial query but drives a later brand search or direct conversion.

Conversion and value metrics transform applied LLMO from a visibility exercise into a revenue conversation. When you can show that a small number of AI citations generated significant assisted revenue, ALLMO becomes a strategic priority rather than an experimental side project.
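One simple way to size assisted value, sketched under the assumption that you can export each conversion's ordered touchpoint channels and revenue from your CRM or analytics tool (data and channel names are hypothetical). It uses an "any earlier AI touch" rule, not GA4's data-driven attribution:

```python
def ai_influence(conversions, ai_channels=frozenset({"AI referral", "AI-influenced"})):
    """Split revenue into last-touch AI revenue and AI-assisted revenue.

    Each conversion is (touchpoints, revenue), with touchpoints ordered oldest
    to newest. Assisted means an AI channel appears earlier in the path but
    not as the final touch.
    """
    last_touch = assisted = 0.0
    for touchpoints, revenue in conversions:
        if not touchpoints:
            continue
        if touchpoints[-1] in ai_channels:
            last_touch += revenue
        elif any(t in ai_channels for t in touchpoints[:-1]):
            assisted += revenue
    return last_touch, assisted

conversions = [
    (["AI referral"], 500.0),
    (["AI referral", "Branded search"], 1200.0),
    (["Organic search", "AI-influenced", "Direct"], 800.0),
    (["Paid search", "Direct"], 300.0),
]
print(ai_influence(conversions))  # (500.0, 2000.0)
```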

Metric 9: Influence (what AI exposure triggers next)

AI citations often generate indirect effects that never appear in referrer logs. Influence and coverage metrics capture these hidden outcomes and identify strategic gaps.

Branded search lift tracks increases in branded queries following spikes in AI citations or mentions. Pull impressions and clicks for branded queries from Google Search Console and establish trend baselines. When you observe a citation surge in ChatGPT or Perplexity answers, check whether branded search activity rises 1–2 weeks later. This pattern reveals zero-click influence: users who read about your brand in an AI answer and later search for you directly without clicking through. Case studies report substantial branded search gains after ALLMO investments, often exceeding immediate referral clicks by 3–5×.
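A minimal before/after sketch of branded lift, assuming a daily series of branded clicks (for example, a Search Console export filtered to queries containing your brand name) and a known spike date. The 28-day baseline and the 7–14 day window are illustrative choices matching the "1–2 weeks later" rule of thumb; the data is synthetic. A simple comparison like this cannot rule out seasonality or other campaigns.

```python
from datetime import date, timedelta
from statistics import mean

def branded_lift(daily_clicks, spike_day, baseline_days=28, lag=(7, 14)):
    """Relative change in branded clicks after a citation spike, or None if data is missing.

    Baseline: the `baseline_days` days before the spike.
    Comparison window: days lag[0]..lag[1] after the spike, inclusive.
    """
    baseline = [daily_clicks[spike_day - timedelta(days=i)]
                for i in range(1, baseline_days + 1)
                if spike_day - timedelta(days=i) in daily_clicks]
    window = [daily_clicks[spike_day + timedelta(days=i)]
              for i in range(lag[0], lag[1] + 1)
              if spike_day + timedelta(days=i) in daily_clicks]
    if not baseline or not window:
        return None
    return mean(window) / mean(baseline) - 1

spike = date(2026, 1, 15)  # hypothetical date of a citation surge
# Synthetic series: 100 branded clicks/day, rising to 130/day from day 7 after the spike.
clicks = {spike + timedelta(days=d): (100 if d < 7 else 130) for d in range(-28, 15)}
lift = branded_lift(clicks, spike)
print(f"Branded lift: {lift:.0%}")  # Branded lift: 30%
```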

Metric 10: Coverage (where you are present)

Engine and prompt coverage monitors where your brand is present (or absent) across ChatGPT, Perplexity, Gemini, and Claude, and across high-demand prompts. Build a representative prompt set (start with 50–200 high-intent prompts per solution area) and log which engines surface your content for each prompt. Prioritize gaps where prompt demand is high (many users ask the question) but your brand is absent or under-cited. Use coverage dashboards to identify competitive vulnerabilities: if a rival appears in 80% of Perplexity answers for a category and you appear in 20%, that is a clear content opportunity.
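The coverage log can be kept as a simple (prompt, engine) → present/absent table. This sketch computes per-engine coverage and ranks high-demand gaps. The demand figures stand in for your own estimate of how often a prompt is asked, and the threshold is an arbitrary example:

```python
def coverage_by_engine(results, prompts, engines):
    """Share of prompts on which each engine surfaced your brand or domain."""
    return {e: sum(results.get((p, e), False) for p in prompts) / len(prompts)
            for e in engines}

def coverage_gaps(results, demand, engines, min_demand=1000):
    """High-demand prompts where you are missing on at least one engine.

    results maps (prompt, engine) -> True if you appeared; missing keys count as absent.
    demand maps prompt -> your own demand estimate.
    Returns (prompt, demand, missing_engines) tuples, highest demand first.
    """
    gaps = []
    for prompt, volume in demand.items():
        if volume < min_demand:
            continue
        missing = [e for e in engines if not results.get((prompt, e), False)]
        if missing:
            gaps.append((prompt, volume, missing))
    return sorted(gaps, key=lambda g: g[1], reverse=True)

engines = ["chatgpt", "perplexity", "gemini", "claude"]
demand = {"best crm for startups": 5000, "crm vs spreadsheet": 1500, "crm glossary": 200}
results = {
    ("best crm for startups", "chatgpt"): True,
    ("best crm for startups", "perplexity"): True,
    ("crm vs spreadsheet", "perplexity"): True,
    ("crm glossary", "chatgpt"): True,
}
print(coverage_gaps(results, demand, engines)[0])
# ('best crm for startups', 5000, ['gemini', 'claude'])
```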

Together, influence and coverage metrics reveal the strategic landscape. Branded lift quantifies hidden impact; coverage analysis directs where to invest next.

Metric 11: Earned Backlinks

Earned backlinks measure how often your content is cited or referenced in AI-generated answers across models like ChatGPT, Perplexity, and Gemini. Unlike traditional backlinks, these references typically appear inside AI responses or source lists, signaling that your content is considered a reliable go-to reference for a given topic.

Frequent AI citations indicate that your content works, meaning it is understandable, authoritative, and easy for models to retrieve and reuse. Over time, this creates a flywheel: cited content is surfaced more often, becomes more familiar to models, and is increasingly treated as a trusted source in future answers.

Track earned backlinks in Google Search Console or a backlink tool of your choice. Rising backlinks from what appears to be AI-generated content suggest growing trust and relevance; flat or declining citations point to gaps in authority, clarity, or coverage compared with competing sources.

How to measure these metrics (instrumentation that works now)

Translating these 11 KPIs into dashboards requires a mix of analytics configuration, specialized tools, and attribution logic.

LLM visibility trackers provide mention, citation, position, and visibility scores that GA4 cannot. Tools like ALLMO.ai and similar platforms log which prompts produce answers that cite your domain, track unlinked brand mentions, and report average position per engine. Perplexity's transparent source lists make it straightforward to audit citations manually; ChatGPT, Gemini, and Claude require prompt sets and periodic sampling.

GA4, Google Search Console, and Looker Studio form the foundation for traffic and conversion tracking. Create a custom channel group in GA4 with a regex rule that matches AI referrers, for example: chatgpt\.com|chat\.openai\.com|perplexity\.ai|gemini\.google\.com|claude\.ai. Add secondary dimensions for landing page, UTM parameters (if you append them to outbound links), and user demographics. Build a Looker Studio dashboard with sections for AI referral volume, conversion rate by AI channel, and weekly trend charts, and include a comparison table of AI traffic vs. organic search vs. direct on engagement and conversion metrics.
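Before pasting a referrer pattern into GA4, it helps to test it against a few source values. This sketch uses Python's `re` module with a pattern that sticks to syntax Python and RE2 (the engine GA4 uses) share. It wraps the AI referrer list, including chatgpt.com (ChatGPT's current domain), in anchors so that the full hostname or a subdomain must match. An unanchored, partial-match version of the same alternation would also accept look-alikes such as "notperplexity.ai", so check whether your GA4 condition matches the full value or only part of it.

```python
import re

# Anchored: the whole source must be an AI hostname, optionally with a subdomain prefix.
AI_SOURCE = re.compile(
    r"^(.*\.)?(chatgpt\.com|chat\.openai\.com|perplexity\.ai|gemini\.google\.com|claude\.ai)$"
)

checks = {  # source value -> should it be classified as AI?
    "chatgpt.com": True,
    "chat.openai.com": True,
    "www.perplexity.ai": True,
    "gemini.google.com": True,
    "claude.ai": True,
    "google.com": False,
    "claude.ai.example.net": False,
    "notperplexity.ai": False,
}
for source, expected in checks.items():
    matched = bool(AI_SOURCE.match(source))
    status = "ok" if matched == expected else "MISMATCH"
    print(f"{source:24} {'AI' if matched else '-':3} {status}")
```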

Attribution guardrails address referrer gaps and zero-click answers. Supplement direct analytics with brand-lift tracking (Search Console branded queries), view-through attribution windows in GA4 (associate prior sessions or impressions with later conversions), and qualitative capture. Add a form field or CRM question: "How did you first hear about us?" with an option for "AI assistant (ChatGPT, Perplexity, etc.)." Sales teams should ask discovery questions to surface AI influence.
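If the "How did you first hear about us?" question is free text rather than a dropdown, a keyword rule can bucket answers before review. The keyword list below is an illustrative assumption. It will miss some phrasings and produce occasional false positives (for example, "Gemini" used in another sense), so spot-check the results by hand.

```python
import re

# Illustrative keywords for self-reported AI discovery; extend for your audience.
AI_KEYWORDS = re.compile(
    r"\b(chat\s?gpt|openai|perplexity|gemini|claude|ai assistant|llm)\b",
    re.IGNORECASE,
)

def classify_heard_about(answer):
    """Bucket a free-text 'How did you first hear about us?' answer."""
    if AI_KEYWORDS.search(answer or ""):
        return "AI assistant"
    return "Other"

responses = ["Asked ChatGPT for tools", "chat gpt recommended you",
             "Perplexity", "Google search", "A colleague told me"]
print([classify_heard_about(r) for r in responses])
```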

This three-layer approach (existing analytics platforms, attribution enhancements, and a specialist AI search visibility platform) provides the measurement spine for AI Search Readiness without requiring a full marketing-tech overhaul.

Model nuances that shape metric behavior

Not all AI assistants behave the same way. Understanding platform-specific citation patterns helps you interpret metrics correctly and tailor content strategies.

ChatGPT is often the largest source of measurable AI referrals in current benchmarks, accounting for 70–87% of AI-driven traffic in many datasets. Linking behavior and referrer parameters vary by mode: browsing with Bing integration may produce different citation patterns than answers the model generates without browsing. ChatGPT's referrer consistency has improved over time, but free-tier users and certain workflows may still omit referrer data. Validate ChatGPT traffic regularly and, if you control outbound links in cited content, append UTM parameters to improve trackability.

Perplexity consistently surfaces numbered, clickable sources in its answer UI, making it the gold standard for citation transparency. Users can see and click sources easily, and competitive analysis is straightforward: search a prompt in Perplexity and inspect which domains are cited. Perplexity is heavily used by researchers and analysts, so expect higher engagement depth from these visitors. Track citation count, inclusion order, and click-through rate for Perplexity separately to benchmark your performance in this citation-heavy environment.

Gemini and Claude show more variable citation behavior. Linking and referrer consistency depend on configuration, user settings, and product updates. Visibility and mention tracking are valuable for these platforms, but expect less reliable referral data in GA4. Treat Gemini and Claude as strategic coverage targets: monitor whether your brand appears in answers and prioritize maintaining presence.

Understanding these nuances prevents misinterpretation. A spike in citations on Perplexity should generate measurable referral traffic; a spike in ChatGPT mentions may produce branded lift without immediate clicks; Gemini or Claude mentions may require manual verification before drawing conclusions.

Key Takeaways

If you ask new leads "How did you find out about us?" in your sign-up survey, include ChatGPT (and other AI assistants) as an answer option.

Use a three-layer measurement stack: GA4 and GSC for traffic and conversions, specialized LLM trackers for mentions and citations, and, for more advanced tracking, attribution guardrails (brand-lift tracking, view-through windows, qualitative capture) to address zero-click and missing-referrer gaps.

Branded search lift often exceeds direct referral clicks by 3–5×, capturing zero-click influence when users read AI answers and later search your brand directly without clicking through.

Build a 50–200 prompt set per solution area and refresh quarterly to track visibility and coverage gaps; prioritize high-demand prompts where competitors appear but you don't.

AI-referred visitors often show 2–3× higher conversion rates than organic search in early case studies, justifying LLMO investment even at low traffic volumes.
